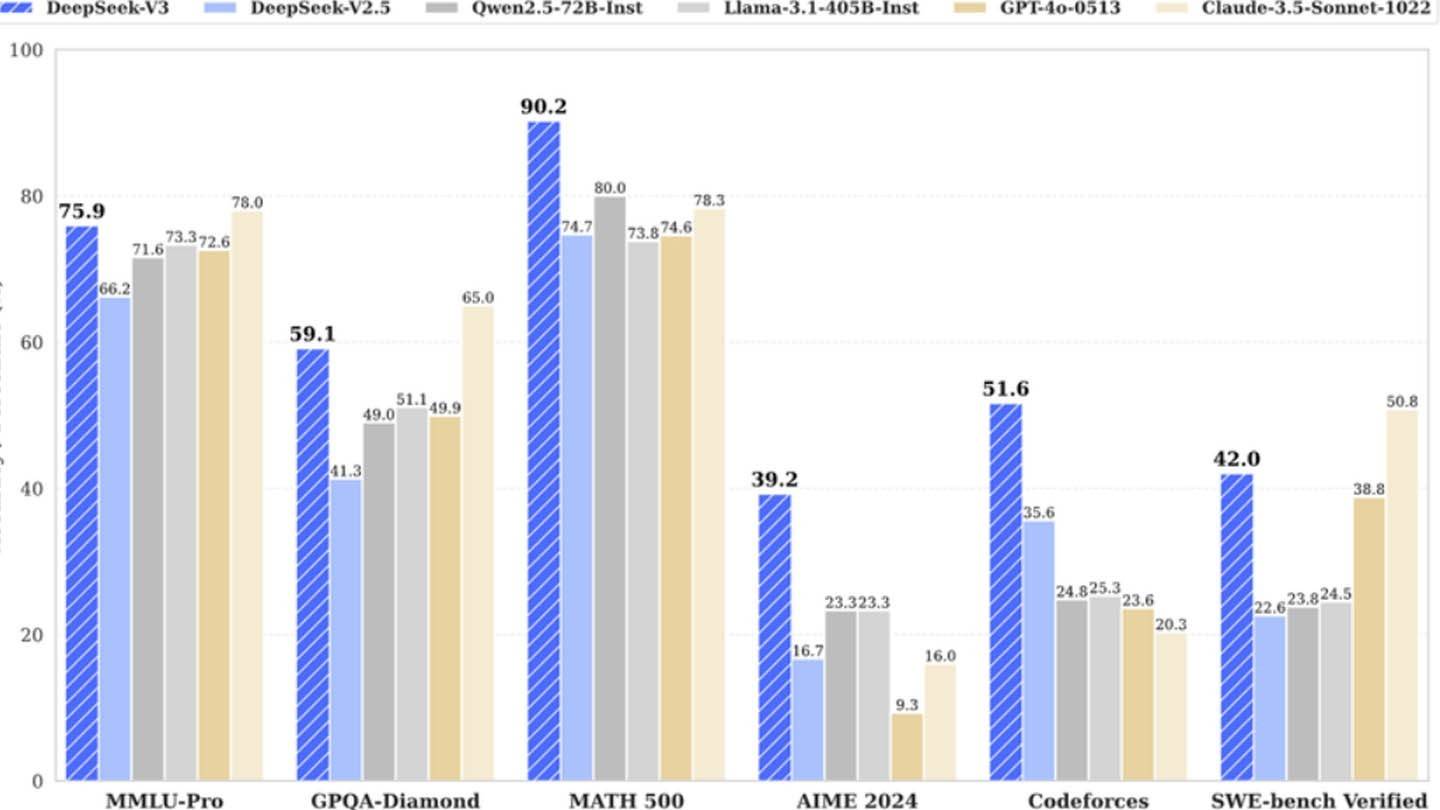

DeepSeek's surprisingly affordable AI model is challenging industry giants. The company says it trained its DeepSeek V3 neural network for just $6 million using only 2,048 GPUs, a stark contrast to competitors' spending. That figure, however, covers only the GPU cost of pre-training; it omits substantial spending on research, refinement, data processing, and infrastructure.

Image: ensigame.com

DeepSeek's technology sets it apart. Key features include Multi-token Prediction (MTP), which predicts several upcoming tokens at once rather than one at a time; Mixture of Experts (MoE), which splits the model into 256 expert networks and routes each input to a small subset of them; and Multi-head Latent Attention (MLA), which improves how the model extracts information from its input. Together, these contribute to the model's accuracy and efficiency.
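To make the Mixture of Experts idea concrete, here is a minimal sketch of generic top-k expert routing. It is not DeepSeek's actual router: the value of `TOP_K`, the softmax weighting, the toy scalar "experts", and the function names `route` and `moe_output` are all assumptions chosen for illustration. Only the count of 256 experts comes from the article.

```python
import math
import random

NUM_EXPERTS = 256  # expert count quoted in the article
TOP_K = 8          # assumption for illustration: experts activated per token


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and weight them by softmax."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_output(token, experts, router_scores, top_k=TOP_K):
    """Run only the selected experts and combine their outputs."""
    return sum(w * experts[i](token) for i, w in route(router_scores, top_k))


# Toy setup: each "expert" just scales its input by a fixed random factor.
rng = random.Random(0)
experts = [(lambda x, s=rng.uniform(-1, 1): s * x) for _ in range(NUM_EXPERTS)]
scores = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]

selected = route(scores)
print(f"{len(selected)} of {NUM_EXPERTS} experts run for this token")
```

The point of the design is that compute per token scales with the number of selected experts, not with the total number of experts in the model.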

Contrary to the publicized $6 million figure, SemiAnalysis reports that DeepSeek operates a massive infrastructure of approximately 50,000 Nvidia Hopper GPUs, valued at roughly $1.6 billion, with operating costs of $944 million. This investment, together with high researcher salaries (exceeding $1.3 million annually), helps the company attract top talent from Chinese universities. Its self-funded status and streamlined structure contribute to its agility and rapid pace of innovation.
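A quick back-of-the-envelope check puts these numbers in proportion. The sketch below uses only figures quoted in the article (none independently verified); the variable names are illustrative.

```python
# Figures as quoted in the article; none are independently verified here.
FLEET_GPUS = 50_000                # approximate Hopper GPU count (SemiAnalysis)
FLEET_VALUE_USD = 1_600_000_000    # approximate value of that infrastructure
PRETRAIN_GPUS = 2_048              # GPUs cited for DeepSeek V3 pre-training

# Average infrastructure value implied per GPU.
value_per_gpu = FLEET_VALUE_USD / FLEET_GPUS

# Fraction of the reported fleet that the V3 pre-training run would occupy.
pretrain_share = PRETRAIN_GPUS / FLEET_GPUS

print(f"Implied value per GPU: ${value_per_gpu:,.0f}")            # $32,000
print(f"Share of fleet used for V3 pre-training: {pretrain_share:.1%}")  # 4.1%
```

On these figures, the GPUs cited for the V3 pre-training run make up only about 4% of the hardware DeepSeek is reported to operate.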

While DeepSeek's "budget-friendly" claim is misleading, its overall investment of more than $500 million in AI development, combined with its technical breakthroughs and skilled workforce, allows it to compete effectively. A comparison of training costs reinforces the point: DeepSeek's R1 cost $5 million to train, while ChatGPT 4 reportedly cost $100 million, a significant cost advantage even when DeepSeek's actual investment is taken into account.
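The comparison can be worked through in a few lines. This sketch uses the article's figures as given and treats "over $500 million" as a lower bound, which is an assumption made for the arithmetic.

```python
# Figures as quoted in the article (USD); not independently verified.
R1_TRAINING_COST = 5_000_000
CHATGPT4_TRAINING_COST = 100_000_000
DEEPSEEK_TOTAL_INVESTMENT = 500_000_000  # "over $500 million", used as a lower bound

# How many times more the competitor's training run reportedly cost.
training_ratio = CHATGPT4_TRAINING_COST / R1_TRAINING_COST

# R1's training cost as a share of DeepSeek's overall investment (an upper
# bound on the share, since the total investment is a lower bound).
training_share = R1_TRAINING_COST / DEEPSEEK_TOTAL_INVESTMENT

print(f"Reported training cost ratio: {training_ratio:.0f}x")        # 20x
print(f"R1 training as share of total investment: {training_share:.1%}")  # 1.0%
```

The 20x gap applies to training runs only. The same figures show that the quoted training cost is at most about 1% of what DeepSeek has invested overall, which is why the headline number understates the company's real spending.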

DeepSeek's success underscores the potential of well-funded, independent AI companies to challenge established players. However, its achievements are rooted in substantial investment, technological advancements, and a highly skilled team, making the initial "low-cost" narrative an oversimplification.
